Opening and Key Highlights of Google Beam

Hello everyone. Good morning. Welcome to Google I/O. We want to get our best models into your hands. Since the last I/O, we have announced over a dozen models and research breakthroughs and released over 20 major AI products and features.

AI Integration and Growth

AI adoption is increasing across our products. The Gemini app now has over 400 million monthly active users. We are seeing strong growth and engagement, particularly with the 2.5 models. We’re also seeing incredible momentum in Search. AI Overviews have more than 1.5 billion users every month. That means Google Search is bringing generative AI to more people than any other product in the world.

Introduction of Google Beam

Today we are ready to announce our next chapter. Introducing Google Beam, a new AI-first video communications platform. Beam uses a new state-of-the-art video model to transform 2D video streams into a realistic 3D experience. Behind the scenes, an array of six cameras captures you from different angles. With AI, we can merge these video streams together and render you on a 3D light field display with near-perfect head tracking, down to the millimetre and at 60 frames per second, all in real time. The result is a much more natural and deeply immersive conversational experience.

Google Beam Rollout

We are so excited to bring this technology to others. In collaboration with HP, the first Google Beam devices will be available for early customers later this year.

Starline Tech in Google Meet

Over the years, we’ve been bringing underlying technology from Project Starline into Google Meet. That includes real-time speech translation to help break down language barriers.

Real-Time Translation in Meet

Today we are introducing real-time speech translation directly in Google Meet. English and Spanish translation is now available for subscribers, with more languages rolling out in the next few weeks.

Project Astra and Gemini Live

Another early research project that debuted on the I/O stage was Project Astra. It explores the future capabilities of a universal AI assistant that can understand the world around you. We are starting to bring it to our products today. Gemini Live has Project Astra’s camera and screen-sharing capabilities, so you can talk about anything you see. People are using it in so many ways, whether practicing for a job interview or training for a marathon. We are rolling this out to everyone on Android and iOS starting today.

Project Mariner and Agentic Capabilities

Next, we also have our research prototype, Project Mariner. It’s an agent that can interact with the web and get stuff done. Project Mariner was an early step forward in testing computer use capabilities. We released it as an early research prototype in December, and we’ve made a lot of progress since. First, we are introducing multitasking: it can now oversee up to 10 simultaneous tasks. Second, it uses a feature called teach and repeat, where you show it a task once and it learns a plan for similar tasks in the future.

Developer Access to Mariner

We are bringing Project Mariner’s computer use capabilities to developers via the Gemini API. Trusted testers like Automation Anywhere and UiPath are already starting to build with it, and it will be available more broadly this summer. We are also starting to bring agentic capabilities to Chrome, Search, and the Gemini app.

Agent Mode in Gemini

Let me tell you what we are excited about in the Gemini app. We call it agent mode. Say you want to find an apartment for you and two roommates in Delhi. You’ve each got a budget of $1,200 a month. You want a washer/dryer or at least a laundry nearby.

Normally, you’d have to spend a lot of time scrolling through endless listings. Using agent mode, the Gemini app goes to work behind the scenes. It finds listings from sites like Zillow that match your criteria and uses Project Mariner when needed to adjust very specific filters.

If there’s an apartment you want to check out, Gemini uses MCP (Model Context Protocol) to access the listings and can even schedule a tour on your behalf. An experimental version of agent mode in the Gemini app will be coming soon to subscribers.
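The matching step in this example comes down to a couple of concrete criteria. As a rough illustration only, here is a minimal Python sketch, assuming a hypothetical `Listing` record and `matches` helper (neither is part of any Gemini, Zillow, or Project Mariner API), that reads the three $1,200 budgets as one combined $3,600 monthly rent and accepts either an in-unit washer/dryer or laundry nearby.

```python
from dataclasses import dataclass

# Hypothetical listing record; field names are illustrative assumptions,
# not a real listings API.
@dataclass
class Listing:
    address: str
    monthly_rent: int      # total rent for the unit, in USD per month
    in_unit_laundry: bool  # washer/dryer in the apartment
    laundry_nearby: bool   # laundromat or shared laundry close by

def matches(listing: Listing, people: int = 3, budget_each: int = 1200) -> bool:
    """Hypothetical filter: True if the rent fits the group's combined
    budget and there is a washer/dryer or at least laundry nearby."""
    combined_budget = people * budget_each  # 3 x $1,200 = $3,600
    has_laundry = listing.in_unit_laundry or listing.laundry_nearby
    return listing.monthly_rent <= combined_budget and has_laundry

listings = [
    Listing("Unit A", 3400, True, False),
    Listing("Unit B", 3800, True, True),    # over the combined budget
    Listing("Unit C", 3000, False, False),  # no laundry option
]
print([l.address for l in listings if matches(l)])  # ['Unit A']
```

Reading the budget as a single combined limit is an interpretive assumption; splitting rent per room would need a different check.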

Personalization Through Context

The best way we can bring research into reality is to make it really useful in your own reality. That’s where personalization will be really powerful. We are working to bring this to life with something we call personal context. With your permission, Gemini models can use relevant context across your Google apps in a way that is private, transparent, and fully under your control.

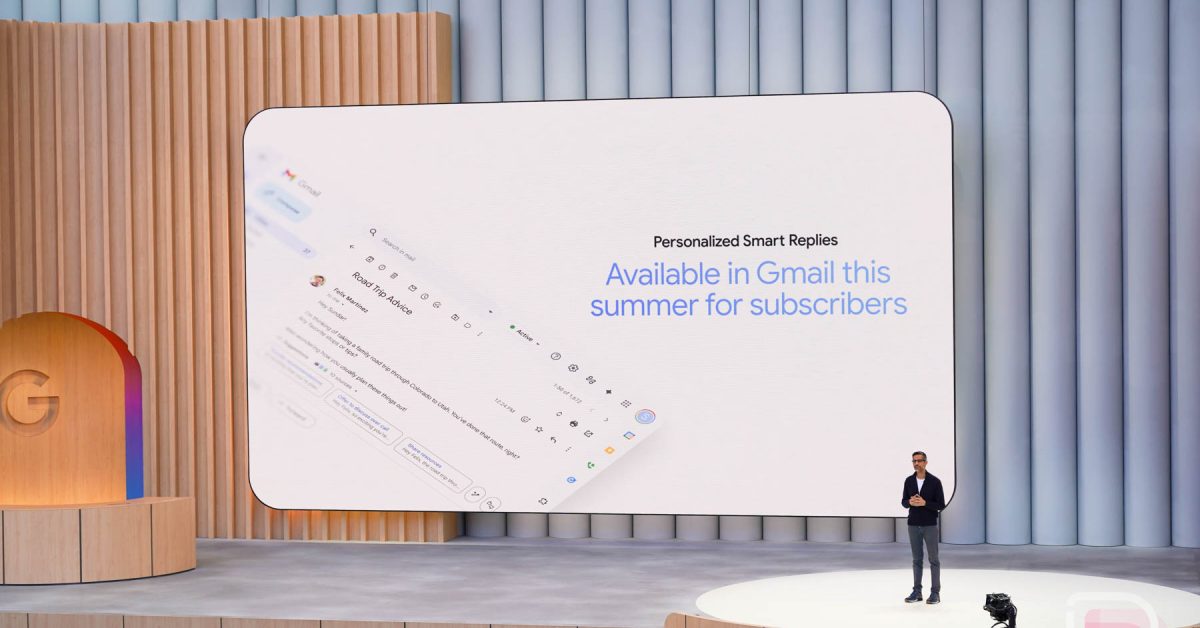

Personalized Smart Replies in Gmail

You might be familiar with our AI-powered smart reply features. It’s amazing how popular they are. Now, imagine if those responses could sound like you. That’s the idea behind personalized smart replies. Let’s say my friend wrote to me looking for advice. He’s taking a road trip to Utah, and he remembers that I did this trip before. Now, if I’m being honest, I would probably reply with something short and unhelpful.

But with personalized smart replies, I can be a better friend. That’s because Gemini can do almost all the work for me: looking up my notes in Drive, scanning past emails for reservations, and finding my itinerary in Google Docs, “Trip to Zion National Park.”

Gemini matches my typical greetings from past emails, captures my tone, style, and favourite word choices, and then automatically generates a reply. I love how it included details like keeping driving time under 5 hours per day, and it uses my favourite adjective, “exciting.” This will be available in Gmail this summer for subscribers.

Introducing Gemini Flash

We’re living through a remarkable moment in history where AI is making possible an amazing new future. Gemini Flash is our most efficient workhorse model. It’s been incredibly popular with developers who love its speed and low cost.

Today, I’m thrilled to announce that we’re releasing an updated version of 2.5 Flash. The new Flash is better in nearly every dimension, improving across key benchmarks for reasoning, code, and long context. In fact, it’s second only to 2.5 Pro on the LMArena leaderboard. I’m excited to say that Flash will be generally available in early June, with Pro soon after.

Developer Tools and Text-to-Speech

I’m so excited to share the improvements we’re making so it’s easier for developers like all of you to build with Gemini 2.5. In addition to the new 2.5 Flash that Demis mentioned, we are also introducing new previews for text-to-speech. These now have first-of-its-kind multi-speaker support for two voices, built on native audio output.

This means the model can converse in more expressive ways. It can capture the really subtle nuances of how we speak. It can even seamlessly switch to a whisper like this. This works in over 24 languages and it can even easily go between languages.

So the model can begin speaking in English, switch to another language, and then switch back, all with the same voice. That’s pretty awesome, right? You can use this text-to-speech capability starting today in the Gemini API.

Reimagining Google Search with AI Mode

No product embodies our mission more than Google search. For those who want an end-to-end AI search experience, we are introducing an all-new AI mode. It’s a total reimagining of search. With more advanced reasoning, you can ask AI mode longer and more complex queries like this. In fact, users have been asking much longer queries, two to three times the length of traditional searches, and you can go further with follow-up questions. All of this is available today as a new tab right in search.

Visual and Conversational Search

With AI mode, you can ask whatever is on your mind. And as you can see here, search gets to work. It generates your response, putting everything together for you, including links to content and creators you might not have otherwise discovered and merchants and businesses with useful information like ratings.

Search uses AI to dynamically adapt the entire UI, the combination of text, images, links, and even this map, just for your question. And you can follow up conversationally. Now, AI mode isn’t just giving you information. It’s bringing a whole new level of intelligence to search.

Search Live with Project Astra

Now, we’re taking the next big leap in multimodality by bringing Project Astra’s live capabilities into AI mode. Think about all those questions that are so much simpler to just talk through and actually show what you mean, like a DIY home repair, a tricky school assignment, or learning a new skill. We call this Search Live. Now, using your camera, Search can see what you see and give you helpful information as you go back and forth in real time. It’s like hopping on a video call with Search.

Visual Shopping and Agentic Checkout

With AI mode, we’re bringing a new level of intelligence to help you shop with Google. I’m faced with the classic online shopping dilemma: I have no clue how these styles will look on me. So, we are introducing a new try-on feature that helps you virtually try on clothes, so you get a feel for how styles might look on you.

I click on this button to try it on. It asks me to upload a picture, which takes me to my camera roll. I have many pictures here. I’m going to pick one that is full-length, with a clear view of me, and off it goes. We need a deep understanding of the human body and how clothing looks on it. To do this, we built a custom image generation model specifically trained for fashion. Wow. And it’s back. So, I’m now set on the dress.

Agentic Checkout and Price Tracking

Search can help me find it at the price that I want and buy it for me with our new agentic checkout feature. So, let me get back here to the dress. I’m going to click this to track the price. I pick my size. Then I have to set a target price. I’m going to set it to about $50. And tracking is happening.

Search will now continuously check websites where the dress is available and then let me know if the price drops. When that happens, I get a notification just like this. And if I want to buy, my checkout agent will add the right size and colour to my cart with just one tap. Search securely buys it for me with Google Pay. And of course, all of this happens under my guidance. Our new visual shopping and agentic checkout features are rolling out in the coming months. And you can start trying on looks in Labs beginning today.
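The price-tracking flow described here is essentially a threshold check repeated over time. A minimal Python sketch of that check follows, assuming a hypothetical `PriceTracker` class and made-up retailer names; it is not how Google implements the feature, and it leaves out the periodic checking of websites, the notification itself, and the Google Pay checkout step.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical price tracker; names and behaviour are illustrative assumptions.
@dataclass
class PriceTracker:
    item: str
    size: str
    target_price: float
    notified: bool = False  # alert only once per tracked item

    def check(self, prices: Dict[str, float]) -> Optional[Tuple[str, float]]:
        """Take the latest price seen at each retailer and return the
        cheapest (retailer, price) if it is at or below the target;
        otherwise return None."""
        if self.notified or not prices:
            return None
        retailer, price = min(prices.items(), key=lambda kv: kv[1])
        if price <= self.target_price:
            self.notified = True
            return retailer, price
        return None

tracker = PriceTracker(item="dress", size="M", target_price=50.0)
print(tracker.check({"shop_a": 64.99, "shop_b": 58.00}))  # None
print(tracker.check({"shop_a": 49.50, "shop_b": 55.00}))  # ('shop_a', 49.5)
print(tracker.check({"shop_a": 45.00}))                   # None (already notified)
```

Sending a single alert rather than one on every later check is a design choice made for this sketch, so that a dress that stays under $50 does not trigger repeated notifications.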
